1. Short Description

The Video-based Object Detection (VOD) and Activity Recognition (AR) module is responsible for interpreting the video input provided to it. Its action is three-fold: three submodules complement each other in this task, namely two object detectors and one activity recogniser. Both detectors perform the same task but are deployed differently. The first, Video-based Object Detection (VOD), is a generic object detection tool for video input. It is trained to detect every instance of the objects of interest inside each frame and is designed to run on a dedicated workstation equipped with GPU hardware. The second, Object Detection at the Edge (ODE), is an object detector designed specifically to run on on-board GPU hardware such as that found in UAVs. Finally, the Activity Recognition (AR) submodule is responsible for recognising any action of interest depicted in the video input.

2. Main purpose and Benefits

As mentioned before, the main purpose of the module is to interpret the video provided to it accurately, promptly and automatically. Because the analysis requires no human involvement, the module can easily be integrated into the project's pipeline. The input of the module is a video and the respective output is a list of all objects of interest detected inside it. The term "detected" covers both recognition (identifying the class to which each object belongs) and localisation (indicating where in the frame the object has been spotted). By combining this information, an analysis of the monitored situation can be deduced. Moreover, since detection is performed at frame level on a sequence of consecutive images, the tool provides temporal information along with spatial information. As a result, the module ultimately provides three kinds of information: spatial (where in the image an object has been detected), temporal (at which frame an object was detected and for how long) and categorical (to which category the detected object belongs). A clarification regarding the classes: the term "object" covers all detectable entities, whether living or not, so humans and animals can be detected too if required. In fact, the person class is typically of crucial importance for scene analysis, since most actions are performed by humans and it is therefore important to be informed whenever a person is present.
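The three kinds of information described above (spatial, temporal and categorical) can be illustrated with a minimal sketch. The `Detection` record, its field names and the `presence_by_label` helper are hypothetical and are not taken from the module's code; the frame rate is assumed to be known and constant.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Detection:
    """One detected object in one frame (hypothetical layout)."""
    frame_index: int                         # temporal: which frame
    label: str                               # categorical: which class
    box: Tuple[float, float, float, float]   # spatial: (x_min, y_min, x_max, y_max)


def presence_by_label(detections: List[Detection], fps: float) -> Dict[str, Tuple[float, float]]:
    """Return, per label, (first-seen time in s, total visible time in s).

    A label counts as visible in a frame if at least one detection
    with that label exists in that frame.
    """
    frames_by_label: Dict[str, Set[int]] = {}
    for det in detections:
        frames_by_label.setdefault(det.label, set()).add(det.frame_index)
    return {
        label: (min(frames) / fps, len(frames) / fps)
        for label, frames in frames_by_label.items()
    }
```

For example, at an assumed 25 frames per second, a label seen in three distinct frames starting at frame 0 yields a first-seen time of 0.0 s and a visible time of 0.12 s.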

The module offers several benefits. First, it is fully automatic, so it can monitor an area continuously and thereby exceed the ability of any human to monitor the same area. Second, it is not affected by fatigue or by the time of day, so its performance stays consistent around the clock. Third, for the ODE submodule specifically, since the input is provided by UAVs, it can cover areas that are difficult for humans to access and thus provide an accurate and timely alert if necessary. Fourth, it can easily be incorporated into modular pipelines and operate at various levels of alert status. Finally, the accumulated information on detected objects can be analysed further to obtain a more thorough understanding of the monitored scene, which provides the opportunity for a second-level analysis.

3. Main Functions

The main functionality of the module is likewise divided into three parts, one for each of the submodules that comprise the module.

VOD is responsible for detecting and reporting all objects of interest inside each frame. The analysis is performed at frame level, which means that each frame is analysed independently of the others. Nevertheless, consecutive frames are connected via a tracker. A tracker is a component responsible for updating the relative position of each object across the processed frames. In other words, a tracker connects the objects detected in one frame with the objects detected in the following (or previous) frames. The tracker thus extends the module to the time dimension and gives the whole pipeline the ability to inspect the monitored area over time. VOD and ODE use the same tracker, while AR does not require one because it already operates in the time domain: AR is responsible for recognising the action performed in a sequence of frames and therefore needs a sequence of frames as input in order to operate.
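The text does not state which tracking algorithm the module uses. Purely to illustrate how a tracker can link per-frame detections, the following minimal sketch uses greedy matching on intersection-over-union (IoU), one common and simple approach. The class name, the threshold value and the box layout are assumptions, and a production tracker would normally also keep lost tracks alive for a few frames.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Illustrative tracker: a box keeps the id of the best-overlapping previous box."""

    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self.tracks: Dict[int, Box] = {}  # object id -> box in the previous frame
        self.next_id = 0

    def update(self, boxes: List[Box]) -> List[int]:
        """Return one object id per box of the current frame, in input order."""
        free = dict(self.tracks)          # previous tracks not yet matched
        new_tracks: Dict[int, Box] = {}
        assigned: List[int] = []
        for box in boxes:
            best_id, best_iou = None, self.iou_threshold
            for track_id, prev_box in free.items():
                score = iou(box, prev_box)
                if score >= best_iou:
                    best_id, best_iou = track_id, score
            if best_id is None:           # no sufficient overlap: new object
                best_id = self.next_id
                self.next_id += 1
            else:                         # reuse the id and consume the track
                del free[best_id]
            new_tracks[best_id] = box
            assigned.append(best_id)
        self.tracks = new_tracks          # unmatched tracks are dropped here
        return assigned
```

Calling `update` once per frame with the detector's boxes returns ids that stay stable while an object moves only slightly between frames, which is the behaviour the paragraph above attributes to the tracker.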

3.1 Function 01

As

mentioned before, VOD and ODE are both detectors and operate in a similar

fashion. They process video inputs while their output is two-fold:

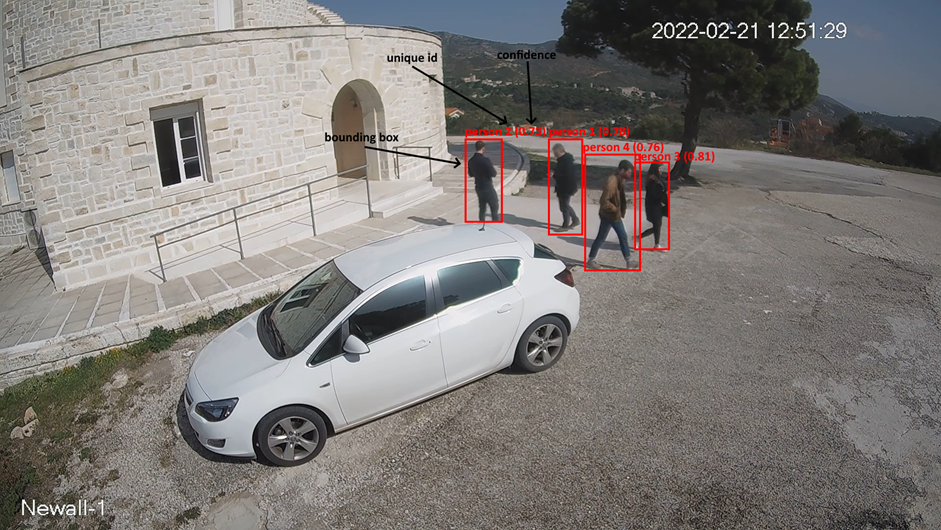

They

report the exact location every object has been detected inside the image

(relative frame coordinates) and also the label each object the module believes

it belongs to. Along with the

class label the module also provides a confidence score about how certain the

module is regarding the label assignment. Finally,

through the integrated tracker, the objects are connected to consecutive frames

by keeping the same object id in order to recognize that an object has simply

changed position and it's not a newly appeared object.

Figure 1. The output of VOD or ODE
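As an illustration of the output fields described above, a single detection could be represented as follows. This is a sketch under assumptions: the record name, the field names and the interpretation of "relative frame coordinates" as values normalised to the range [0, 1] are not confirmed by the text.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class TrackedDetection:
    """One reported object (hypothetical layout, not the module's actual schema)."""
    object_id: int                           # id kept by the tracker across frames
    label: str                               # class assigned by the detector
    confidence: float                        # certainty of the label, assumed in [0, 1]
    box: Tuple[float, float, float, float]   # relative (x_min, y_min, x_max, y_max)

    def to_pixels(self, width: int, height: int) -> Tuple[int, int, int, int]:
        """Convert the relative box to pixel coordinates for a given frame size."""
        x0, y0, x1, y1 = self.box
        return (round(x0 * width), round(y0 * height),
                round(x1 * width), round(y1 * height))


def keep_confident(detections: List[TrackedDetection], threshold: float) -> List[TrackedDetection]:
    """Keep only detections whose confidence reaches the threshold."""
    return [d for d in detections if d.confidence >= threshold]
```

Storing coordinates relative to the frame size keeps a record valid when the same stream is rendered at a different resolution; `to_pixels` is only needed when drawing bounding boxes on a concrete frame.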

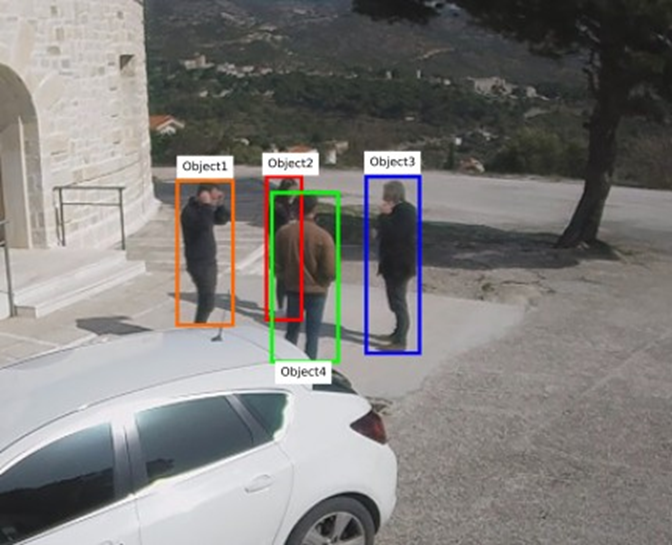

Figure 2. Object ids assigned by the tracker

3.2 Function 02

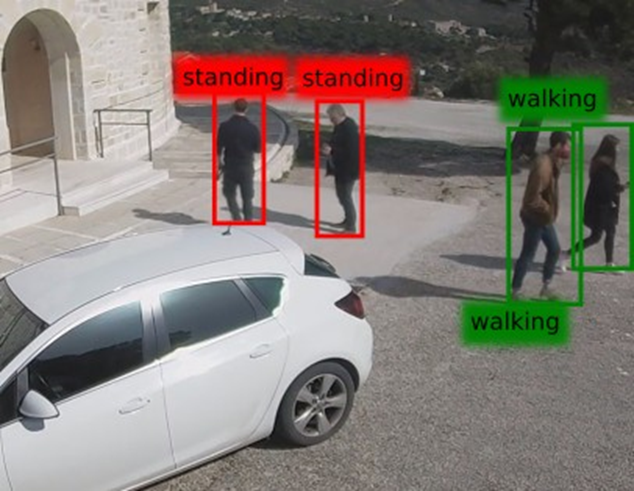

AR operates as an extension of VOD

and it has some differences from the detectors. First, it functions on a

sequence of frames and, thus, no tracker is being used or need per se. Also,

since it operates on a time space it inherently provides information about the

evolution of the actions performed by every person.

Figure 3 The output of AR submodule4. Integration with other Modules

VOD, ODE and AR are backend submodules and do not require any user interface for direct communication with the user.

There are two ways of communicating with the rest of the modules.

1) The first and more common approach is to use the project’s messaging system. Through it the module can be triggered (if needed) and can propagate its outputs. Since the VOD submodule is expected to operate on CCTV cameras, triggering is not enabled for it. When launched, the module consumes the video stream propagated from the cameras and proceeds with the analysis of the frames. After analysing a number of frames, it collects the results (the objects detected in these frames) and propagates them to the Geospatial Complex Event Processing Engine (G-CEP) module. AR functions in a similar fashion and also propagates its output to G-CEP. In addition, the VOD submodule can store snapshots of the annotated frames (i.e. frames on which the detected objects have been marked with bounding boxes).

2) A faster and more direct channel is used by the ODE submodule to communicate with the UAVs via User Datagram Protocol (UDP) messages. The information received this way comprises the telemetry of the UAV, which is incorporated in the output message, as well as signals for starting and stopping the detection. UDP messages are used solely as input to the ODE submodule, while its output is propagated via the messaging system, in the same way as for VOD and AR.
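The two communication paths above can be sketched together. The text specifies neither the wire format of the UDP messages nor the client of the messaging system, so everything concrete here is an assumption: UDP payloads are taken to be JSON objects with optional `command` and `telemetry` fields, the batch size is arbitrary, and `publish` is a plain callable standing in for the messaging-system client.

```python
import json
import socket
from typing import Callable, List, Optional


class BatchPublisher:
    """Collects per-frame results and publishes them every `batch_size` frames."""

    def __init__(self, publish: Callable[[str], None], batch_size: int = 5):
        self.publish = publish                  # stand-in for the messaging system
        self.batch_size = batch_size
        self.pending: List[dict] = []
        self.telemetry: Optional[dict] = None   # only set in the ODE case

    def add_frame(self, frame_index: int, detections: List[dict]) -> None:
        self.pending.append({"frame": frame_index, "detections": detections})
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Publish pending results, attaching the latest UAV telemetry if any."""
        if not self.pending:
            return
        message = {"frames": self.pending}
        if self.telemetry is not None:
            message["telemetry"] = self.telemetry
        self.publish(json.dumps(message))
        self.pending = []


def handle_datagram(payload: bytes, publisher: BatchPublisher, running: bool) -> bool:
    """Apply one UDP control message (assumed JSON layout); return the new running state."""
    msg = json.loads(payload.decode("utf-8"))
    if "telemetry" in msg:
        publisher.telemetry = msg["telemetry"]
    command = msg.get("command")
    if command == "start":
        return True
    if command == "stop":
        publisher.flush()                       # do not lose a partial batch
        return False
    return running


def open_control_socket(host: str = "0.0.0.0", port: int = 0) -> socket.socket:
    """Bind a UDP socket for incoming control messages (port 0: OS picks a free port)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock
```

In this sketch, ODE would read datagrams from the socket with `recvfrom`, pass each payload to `handle_datagram`, and call `add_frame` only while the running state is true. VOD and AR would use `BatchPublisher` alone, without the UDP part.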

5. Infrastructure Requirements

All three submodules can be operated either locally or remotely, but the latter usually introduces some delay in the video streaming and, thus, in the whole pipeline.

The main infrastructure need of the module is a dedicated GPU for performing the core detection operations. A GPU is not strictly mandatory, but it is highly advisable: without one, the efficiency of the module is reduced significantly and the whole pipeline underperforms.

6. Operational Manual

6.1 Set-up

The module can be launched using either a Docker container or a virtual environment with all the required packages preinstalled. Since a GPU card needs to be available, the module is tested on specific workstations that have this hardware.

Typical execution requires a functional video stream link to be provided to the VOD submodule. This means that a functional video stream must be reachable from wherever VOD is executed. Usually, this depends on each premises and the way its security has been set up, and involves a private network, a firewall bypassing mechanism or a similar arrangement. AR does not require any video stream since it operates on the VOD output. ODE uses the video captured by the UAV camera, so no additional requirements exist for processing the frames.

For all submodules, an active connection to the internet (or, equivalently, to the location where the messaging system is installed) is required in order to publish the results of the module.

6.2 Getting Started

As long as the connectivity requirements are fulfilled, the module can operate and consume any video stream provided to it.

6.3 Nominal Operations

The module typically responds to any ping sent to it to check whether it is available in the project’s pipeline.

For the VOD submodule, the required data are the video streams being propagated. The AR submodule does not require any additional data, while ODE acquires the required data from the UAV itself (through UDP messages).

6.3.3 User Inputs

No direct user inputs are provided to the module.

6.3.4 User Output

The output of the module is propagated to the users through various intermediate modules such as G-CEP, SPGU and CRCL. Additionally, end users can inspect screenshots of the detected objects by following the links provided in the propagated messages.